Technical SEO: Why Is It Important?

Search engine optimization (SEO), at its core, encompasses three categories: on-page, off-page, and technical SEO. On-page SEO focuses on working keywords and topics into a webpage so that the title, headings, subheadings, and body accurately describe the target topics and help the page rank on search engines.

Off-page SEO deals with external efforts to increase a website’s authority and rank higher on search engines. It involves backlink building, content marketing, brand mentions, and social media marketing.

Technical SEO focuses on improving website speed, security, mobile-friendliness, and user experience. Search engine bots evaluate these factors to determine whether a website is accessible and navigable for web users, and therefore whether it’s worthy of ranking on search engine results pages (SERPs).

What Is Technical SEO?

Technical SEO is the practice of improving your website’s visibility on SERPs by modifying technical aspects like sitemap, website architecture, and site speed. It forms the core of a website’s crawlability, performance, and indexation.

It improves your chances of ranking for two reasons. First, it makes it easy for search engines to crawl and index all your web pages. Second, mobile responsiveness and fast page speed signal to Google that your content is easily accessible to users.

This means that no matter how valuable your content is, your web pages may remain hidden from your target audience if your website doesn’t check all the backend optimization boxes. This will reduce your business’s organic traffic, conversions, and potential revenue.

Let’s discuss the core pillars of technical SEO and how to improve yours.

1. Website Architecture

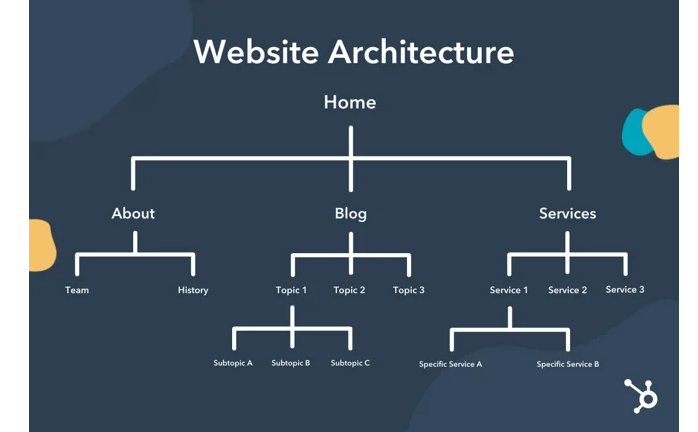

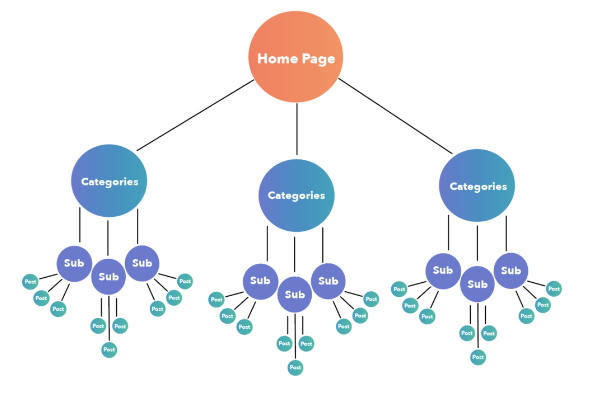

Website architecture is the structured organization of all the pages on a website. It describes how the pages link to one another and where each page sits in the site’s hierarchy. See the image below for clarity:

A well-structured website allows users to explore your website easily. This means your pages are well interlinked, and users can easily navigate from one web page to another. This structured architecture increases user engagement and boosts conversions. It also allows search engines like Google and Bing to crawl your site easily, which is vital for prioritizing and ranking different pages.

For simplicity, this article uses Google to stand in for search engines in general.

Google uses specialized robots called web crawlers to explore the internet and to discover and index new pages. Web crawlers follow internal links as navigational trails through a website. A web page that is not indexed has zero chance of ranking, so link to your other pages when you write content. You must also keep your sitemap up to date and functional so that your pages are crawlable and indexable.

The sitemap contains links to all the website pages you want search engine bots to crawl. You’ll learn more about this later.

2. Page Speed

Although content relevance to search intent is key to gaining visibility on Google, page speed is also an important ranking factor. Page speed is the time it takes for a page to load after a user clicks a link. According to Google, the probability of a bounce increases by 32% as page load time goes from one second to three seconds.

“Bounce rate” is the percentage of visitors that leave a website after viewing a page. These visitors didn’t interact with other pages; they didn’t click another link, fill out a form, or buy anything. Sometimes, the bounce rate is also the percentage of those who go back to the SERP because your website took too long to load. And this is bad for your SEO.

Why? It shows your website isn’t preferred by web users, and it signals to Google that it probably doesn’t offer much value.

One way to reduce bounce rates is by ensuring your website loads fast. How? Start by using tools like TinyPNG or ImageOptim to compress images without noticeably compromising quality. You should also optimize web fonts by limiting the number of different fonts and font weights/styles. This reduces the amount of content each page loads and speeds up delivery.

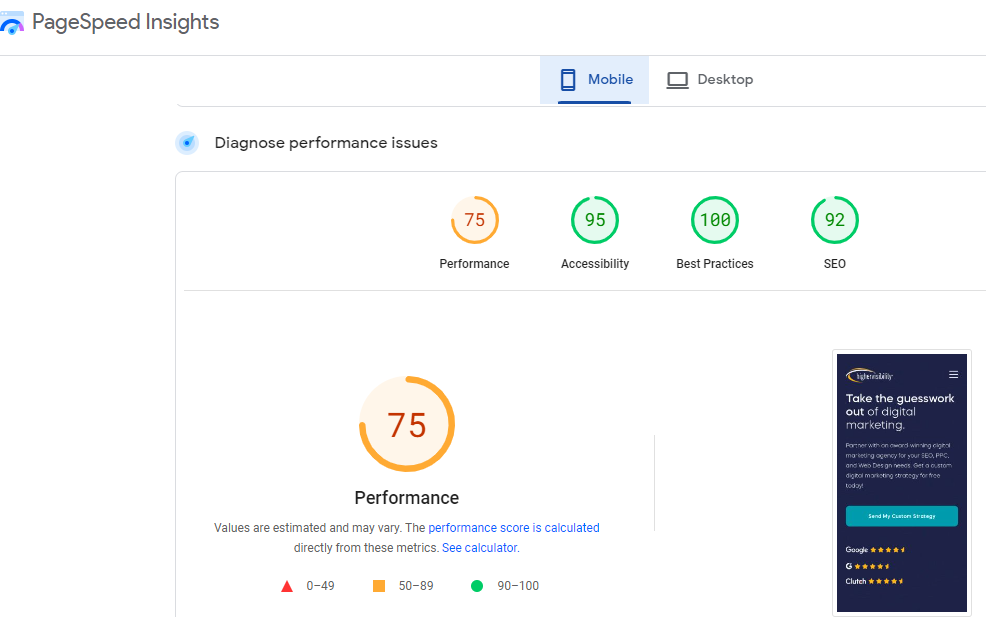

How do you know if your website is optimized to load fast? Google’s PageSpeed Insights is a free website speed and performance analysis tool. It offers diagnostics and prompts on how to solve any performance issues.

To provide a comprehensive user experience analysis, the tool analyzes your mobile and desktop versions. This way, you can spot current challenges with your user interface and improve the user experience for mobile and desktop users.

3. Mobile Optimization

55% of website traffic comes from mobile devices, and that’s because 92% of internet users access the web through their smartphones. Optimizing your website for mobile is a smart way to appeal to users, enhance their experience, and make navigation and conversion easier when they’re on your webpage.

Google’s latest approach to indexing and ranking web pages also prioritizes mobile experience. This mobile-first indexing method focuses on crawling, indexing, and ranking a site’s content using its mobile version.

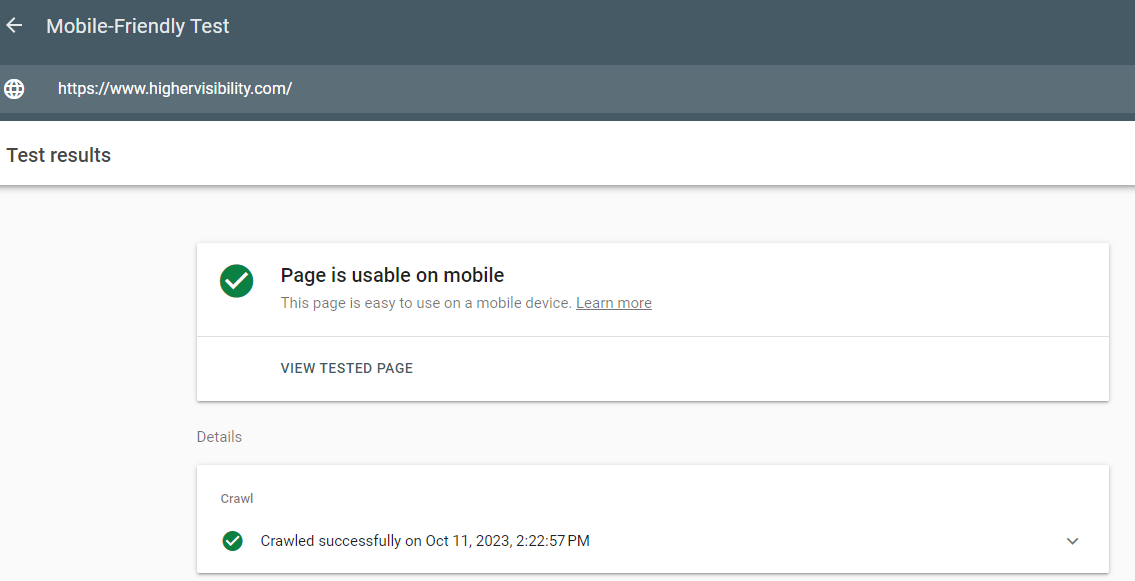

Take the Google Search Console’s Mobile-friendly test to check if your web page is mobile-friendly.

If the web page is mobile-friendly, you’ll get feedback that the URL you tested is usable on mobile. Just like this:

To optimize for mobile, implement a responsive design that adjusts to different screen sizes. You should also ensure all buttons and clickable elements are well spaced and easily tappable. Another common strategy is using legible font sizes; this helps users avoid having to zoom in on a block of text before reading or interacting with your web pages. While these aren’t the only solutions, they improve user experience and your website’s accessibility and visibility.
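One quick signal of a responsive design is a viewport meta tag in the page’s head. The following is a minimal sketch, using only Python’s standard library, of how you might check for it. The class and function names are hypothetical, and a real audit would look at far more than this one tag.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of <meta name="viewport"> if the page has one."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "viewport":
            self.viewport = attr_map.get("content", "")


def has_responsive_viewport(html):
    """Return True if the page declares a device-width viewport."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    # Ignore spacing differences such as "width = device-width".
    return "width=device-width" in finder.viewport.replace(" ", "")


sample_page = (
    '<head><meta name="viewport" '
    'content="width=device-width, initial-scale=1"></head>'
)
print(has_responsive_viewport(sample_page))
```

A page that fails this check is not necessarily broken on mobile, but it is a good place to start looking.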

4. HTTPS and Security

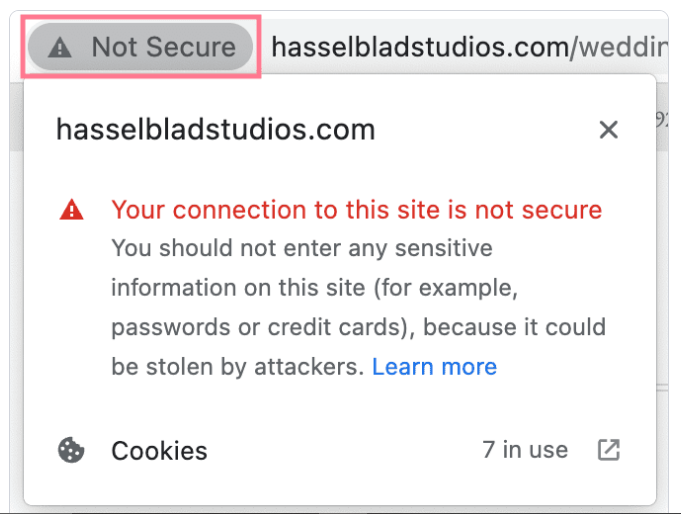

Since Google announced HTTPS as a ranking signal in 2014, many websites have transitioned from HTTP to HTTPS. HTTPS is the improved version of HTTP that encrypts information exchange with websites. This protects sensitive information like passwords, credit card details, and personal data from being compromised.

You can check if your website uses HTTPS by entering the URL into your browser’s address bar and opening it.

If you see a lock icon as displayed above in the address bar, it confirms that your site uses HTTPS.

If you see a ‘Not Secure’ warning like below, you’re not using HTTPS.

You can rectify this by installing an SSL/TLS certificate to authenticate and encrypt all connections to your site. This makes your website more trusted by web users and Google.

5. XML Sitemaps

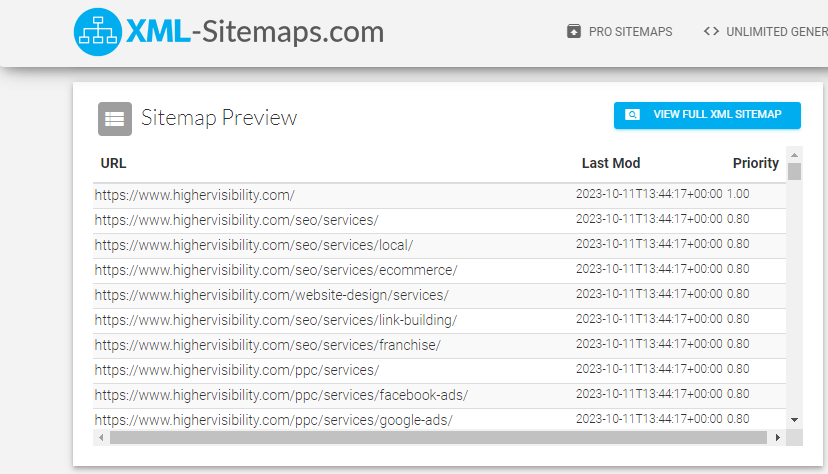

XML sitemaps are files consisting of links to all your web pages. A sitemap helps search engines find and index new pages from your website, and you can automatically generate it via XML-Sitemaps.com or plugins like Yoast or Rank Math. It’ll look like this:

After generating your sitemap, submit the file to search engines for crawling and indexing through webmaster tools like Google Search Console and Bing Webmaster Tools.

Pages missing from your sitemap can still be discovered through links, but search engines may take longer to find and index them, so you should always keep your sitemap updated.
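If you would rather script the sitemap than rely on a generator, a minimal sketch with Python’s standard library might look like the following. The `build_sitemap` function, the page URLs, and the dates are illustrative assumptions; the `urlset`, `url`, `loc`, and `lastmod` elements come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = last_modified
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages; replace with the URLs you want crawled.
sitemap_xml = build_sitemap([
    ("https://www.samplesite.com/", "2024-01-15"),
    ("https://www.samplesite.com/blog/technical-seo", "2024-02-01"),
])
print(sitemap_xml)
```

Save the output as sitemap.xml in your site’s root directory before submitting it.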

6. Robots.txt

A robots.txt file contains directives that tell search engine bots which pages should and should not be crawled. It helps you point search engines to your sitemap and keep them away from duplicate pages and sensitive content. Together, these give you better control over how search engines crawl and index your website. Here are some mistakes to avoid when creating a robots.txt file.

- Not Placing the Robots.txt File in the Root Directory: The correct location for your robots.txt file is samplesite.com/robots.txt. Not placing the robots.txt file in the root directory can prevent search engines from identifying and adhering to your crawl directives. This may harm your site’s visibility and indexation in search results.

- Putting ‘NoIndex’ in Robots.txt: Google stopped supporting the noindex directive in robots.txt in 2019, so if you want to prevent some pages from being indexed, use a meta robots tag instead. This keeps those specific pages out of the index as you intended.

- Not Including the Sitemap URL: Adding a sitemap URL, such as sitemap: https://www.samplesite.com/sitemap.xml, lets Google discover your sitemap easily. Not including the sitemap URL in the robots.txt or website configuration can hinder Google from efficiently crawling and indexing your site. This will reduce visibility in search results.

- Ignoring Case Sensitivity: The file must be named robots.txt in lowercase, and the paths in its rules are case-sensitive, so write each line exactly as the URLs appear on your site.
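To see how crawl rules, the sitemap line, and case sensitivity play out, here is a small sketch that parses a sample robots.txt with Python’s built-in `urllib.robotparser`. The file contents and URLs are made up for illustration, and Python’s parser is only an approximation of how any particular search engine reads the file.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt for samplesite.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /

Sitemap: https://www.samplesite.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Regular pages are crawlable; the admin area is blocked.
can_crawl_blog = parser.can_fetch("*", "https://www.samplesite.com/blog/")
can_crawl_admin = parser.can_fetch("*", "https://www.samplesite.com/admin/settings")

# Paths are case-sensitive: /Admin/ is not covered by the /admin/ rule.
can_crawl_upper = parser.can_fetch("*", "https://www.samplesite.com/Admin/settings")

# The Sitemap line is exposed separately (Python 3.8+).
sitemaps = parser.site_maps()

print(can_crawl_blog, can_crawl_admin, can_crawl_upper, sitemaps)
```

Running a check like this before deploying a new robots.txt can catch a rule that blocks more, or less, than you meant it to.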

7. Eliminating Duplication: The Canonical Tag

A single page with two different URLs results in duplicate content, and when Google bots discover duplicate content, they automatically select one to promote for visibility.

To ensure that the content with higher authority and ranking potential is selected, you can add a canonical tag to signal the preferred page. According to Adam Heitzman, Managing Partner at HigherVisibility, “canonical tags help indicate preferred versions” of your content.
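A canonical tag is a `<link rel="canonical">` element in the page’s head. As a rough sketch, the snippet below uses Python’s standard library to report which canonical URL a page declares; the class name, function name, and sample page are hypothetical.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> on a page."""

    def __init__(self):
        super().__init__()
        self.canonical_urls = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if "canonical" in attr_map.get("rel", "").lower().split():
            self.canonical_urls.append(attr_map.get("href", ""))


def find_canonical(html):
    """Return the list of canonical URLs declared in the HTML."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical_urls


page = """
<head>
  <title>Perfect Roast Turkey</title>
  <link rel="canonical" href="https://www.samplesite.com/recipes/roast-turkey">
</head>
"""
canonicals = find_canonical(page)
print(canonicals)
```

An empty list means the page leaves the choice to the search engine, and more than one entry means the page is sending conflicting signals.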

8. Schema Markup

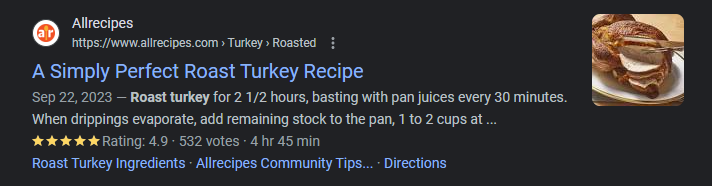

Schema markup, or structured data, is the code Google interprets to understand your webpage content. It provides specific details that enable Google to display additional information in search results. These could include ratings, images, price, video, in-stock or out-of-stock tags, recipe, music release date, etc.

You can get the most out of schema markup by using it to earn rich snippets and improve your clickthrough rate (CTR). Adding rich snippets to your web content does not affect your site’s ranking directly. However, it makes your page appear more appealing and informative. For example, schema markup can structure FAQs or Q&A sections to appear directly in the search results. It potentially answers a user’s query right from the SERP and can increase clickthrough rates.

Here’s an example on a food recipe website:

As you can see, the rich snippets give context to the webpage, providing details on the process for making a “perfect roast turkey.”

Rich snippets are great for finance, educational, food recipes, music, travel, and e-commerce pages. Users are more likely to narrow down on websites with specific information about their search query than those without them. By leveraging rich snippets, you position your website to gain more clicks and, ultimately, more leads.
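Schema markup is commonly added as a JSON-LD script in the page’s HTML. The sketch below builds one for a recipe page using the schema.org `Recipe` type. The function name and all the values (author, rating, cooking time) are invented for illustration, and a production page would usually include more properties.

```python
import json


def recipe_json_ld(name, author, rating_value, rating_count, total_time):
    """Return a JSON-LD <script> block describing a recipe."""
    data = {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "author": {"@type": "Person", "name": author},
        "totalTime": total_time,  # ISO 8601 duration, e.g. PT3H30M
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "ratingCount": rating_count,
        },
    }
    payload = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical values for a sample recipe page.
snippet = recipe_json_ld(
    name="Perfect Roast Turkey",
    author="Sample Author",
    rating_value=4.8,
    rating_count=125,
    total_time="PT3H30M",
)
print(snippet)
```

Paste the generated block into the page’s HTML, then validate it with a structured data testing tool before relying on it.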

9. Fixing Broken Links

Links usually ‘break’ because they are incomplete or point to pages that no longer exist. Audit your website to identify these links, then fix them.

You can use the Google Search Console to generate a report about technical issues your website might have, including broken links. You can also use a third-party resource like Semrush Backlink Audit Tool to perform a site audit and identify broken links.

Here are important tips for fixing broken links:

- Remove the link if it leads to a deleted or empty page.

- Use a 301 redirect to send visitors from the broken URL to a new, relevant page.

- If the link is external, send a message to the domain holder to see if they are willing to go in on their end and fix the link.

- Edit the link to its correct format if it was broken due to typographical errors.
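For a small site, you can also script a basic link check yourself. The following sketch, built on Python’s standard library, treats any link that returns a 4xx or 5xx status, or that cannot be reached at all, as broken. The function names are hypothetical, and some servers reject HEAD requests, so treat the results as a starting point rather than a full audit.

```python
import urllib.error
import urllib.request


def fetch_status(url, timeout=10):
    """Return the HTTP status code for url, or None if it cannot be reached."""
    try:
        request = urllib.request.Request(url, method="HEAD")
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # e.g. 404 or 500
    except (OSError, ValueError):
        return None  # DNS failure, timeout, malformed URL, and so on


def find_broken_links(urls, get_status=fetch_status):
    """Map each broken URL to its status code (None means unreachable)."""
    broken = {}
    for url in urls:
        status = get_status(url)
        if status is None or status >= 400:
            broken[url] = status
    return broken
```

Calling `find_broken_links(["https://www.samplesite.com/old-page"])` returns a dictionary of the links that need attention; the `get_status` parameter lets you swap in a different checker, which also makes the function easy to test without network access.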

10. Optimal URL Structures

Your URL is the address of your website and of each page that holds your content. Keep your website URLs simple, as short, descriptive URLs tend to perform better than long, complex ones.

Users will likely remember simpler URLs, so ensure your links are concise, readable, and understandable for better user experience and SEO.

Here are common pitfalls to avoid when optimizing your URLs:

- Avoid using overly complex URLs, as they can cause problems for crawlers.

- Don’t stuff URLs with keywords, as it can hurt your site’s ranking performance rather than help it.

- Using uppercase in URLs makes them hard to read; lowercase is usually preferred.

- Avoid using dynamic parameters in your URLs, such as session IDs and timestamps, which contribute to longer and more complex URLs.
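The pitfalls above can be turned into a simple automated check. This is a rough sketch only: the 75-character limit, the list of parameter names, and the repeated-word test for keyword stuffing are all assumptions chosen for illustration, not official thresholds.

```python
from urllib.parse import parse_qs, urlparse

MAX_URL_LENGTH = 75  # assumed limit, not an official rule
DYNAMIC_PARAMS = {"sessionid", "sid", "timestamp", "ts"}  # hypothetical names


def audit_url(url):
    """Return a list of URL structure issues found in url."""
    issues = []
    parsed = urlparse(url)

    if len(url) > MAX_URL_LENGTH:
        issues.append("too long")

    if parsed.path != parsed.path.lower():
        issues.append("uppercase in path")

    params = {name.lower() for name in parse_qs(parsed.query)}
    if params & DYNAMIC_PARAMS:
        issues.append("dynamic parameters")

    # Crude keyword-stuffing heuristic: the same word appears more than once.
    words = [
        word
        for word in parsed.path.lower().replace("/", "-").split("-")
        if word
    ]
    if len(words) != len(set(words)):
        issues.append("repeated keywords")

    return issues


print(audit_url("https://www.samplesite.com/blog/technical-seo"))
print(audit_url("https://www.samplesite.com/Blog/SEO-seo-tips?sessionid=123"))
```

An empty list means the URL passed every check in this sketch, not that it is guaranteed to be optimal.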

11. Site Depth

Site depth is the number of clicks it takes to go from the homepage to the farthest page on a website. The homepage is the strongest page and sits at level 0, while categories and subcategories sit at levels 1 and 2, respectively. The more levels of subpages a site has, the greater its depth.

The general SEO rule for site depth is to keep it below 4. Web pages at deeper site depth are usually associated with poorer SEO performance due to the difficulty of being crawled by search engines. To ensure that all your pages are easily accessible, crawled, and indexed, keep them between a site depth of 1 to 3.
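Site depth can be computed with a breadth-first search over your internal links, starting from the homepage. The sketch below assumes you already have a mapping of each page to the pages it links to; the `site_links` data and the function name are made up for illustration.

```python
from collections import deque


def click_depths(links, homepage):
    """Return the minimum number of clicks from homepage to each reachable page."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link structure: page -> pages it links to.
site_links = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/technical-seo"],
    "/products": ["/products/shoes"],
    "/products/shoes": ["/products/shoes/running"],
    "/products/shoes/running": ["/products/shoes/running/trail"],
}

depths = click_depths(site_links, "/")
too_deep = [page for page, depth in depths.items() if depth > 3]
print(too_deep)
```

Pages that end up in `too_deep` are candidates for a link from a shallower page, such as a category page or the homepage. Pages that never appear in `depths` are not reachable through internal links at all.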

12. Image Optimization

Optimizing the images on your web pages can improve your site’s SEO performance. You can use images to improve clickthrough rates and get better ranking through featured snippets. On top of that, if you sell physical products, your target customers are more likely to search for them in the images section. Regardless of your business model, image SEO is necessary to speed up your website’s loading time, attract leads, and gain more visibility online.

Here are some tips on how to optimize your images for SEO:

- Resize images to smaller sizes to reduce load time.

- Add descriptive alt text that includes relevant keywords; it helps visually impaired readers and supports SEO.

- Name image files (right from the computer) using keywords rather than irrelevant numbers and symbols. For example, “recipe for roasted turkey,” not “IMG 234”.

- Use the right format to ensure the image displays clearly; PNG is best for images with transparent backgrounds, while JPG is preferred for large photographs because it keeps file sizes small at acceptable quality.
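Missing alt text is one of the easier image issues to detect automatically. Here is a minimal sketch that lists the images on a page with no alt text, using Python’s standard library. The names and sample HTML are hypothetical, and note that purely decorative images may legitimately have an empty alt attribute.

```python
from html.parser import HTMLParser


class ImageAltAuditor(HTMLParser):
    """Collects the src of every <img> with missing or empty alt text."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if not attr_map.get("alt", "").strip():
            self.missing_alt.append(attr_map.get("src", ""))


def images_missing_alt(html):
    """Return the src values of images that lack alt text."""
    auditor = ImageAltAuditor()
    auditor.feed(html)
    return auditor.missing_alt


sample_html = (
    '<img src="roasted-turkey-recipe.jpg" alt="Roasted turkey on a platter">'
    '<img src="IMG-234.jpg">'
)
print(images_missing_alt(sample_html))
```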

13. JavaScript and SEO

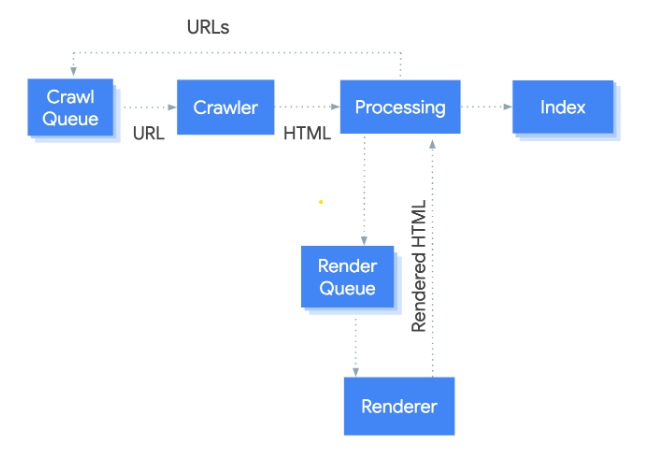

JavaScript is a programming language used on most interactive websites. It’s responsible for pop-up forms, image displays, audio, video and animation playback, and site responsiveness. Web pages with few interactive features tend to load faster, but if your website is JavaScript-heavy, you may need to take extra measures to improve its speed.

You can make your site’s JavaScript SEO-friendly by using server-side rendering frameworks like Next.js, Nuxt.js, or Angular Universal. These frameworks render the HTML version of the web pages on your server and send it to the browser, making it easier for search engines to crawl and index your content. This reduces the time it takes for a page’s content to load and lessens the occurrence of layout shifts, which negatively impact the user experience.

14. Core Web Vitals

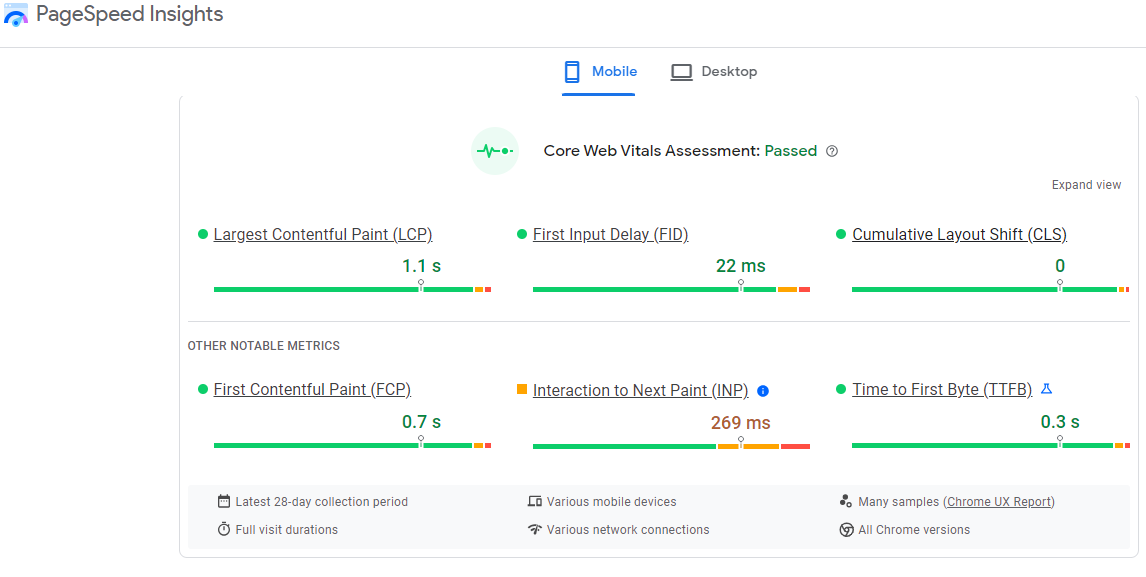

Core web vitals are metrics that Google uses to rate a web page’s user experience. There are three core web vitals: the Largest Contentful Paint (LCP), First Input Delay (FID), and Cumulative Layout Shift (CLS).

Largest Contentful Paint (LCP) is the amount of time it takes for the largest content element on a page to display fully. Aim to keep your web page’s LCP below 2.5 seconds for optimal performance.

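First Input Delay (FID) is the time it takes for the browser to respond to a user’s first interaction with an element on a website. If it goes beyond 100 milliseconds, it signals a need for improvement. Note that in March 2024, Google replaced FID with Interaction to Next Paint (INP) as a Core Web Vital; an INP of 200 milliseconds or less is considered good.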

Cumulative Layout Shift (CLS) measures how much the visible content of a page shifts unexpectedly while users view or interact with your website.

Layout shifts happen when text, links, or other elements move unexpectedly, leading to an unsatisfying user experience. When your CLS score exceeds 0.1, you should work on reducing these shifts.

Core web vitals are important metrics Google algorithms look for to rank websites based on user experience performance. You can measure your website’s core web vitals on Google’s PageSpeed Insights page.
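Once you have your measurements, comparing them against the thresholds mentioned above is straightforward. The sketch below uses the limits quoted in this article (2.5 seconds, 100 milliseconds, and 0.1); the metric keys and function name are hypothetical, and the sample numbers are invented.

```python
# Thresholds as quoted in this article; the key names are illustrative.
THRESHOLDS = {
    "lcp_seconds": 2.5,
    "fid_milliseconds": 100,
    "cls_score": 0.1,
}


def needs_improvement(measurements):
    """Return the metrics whose measured value exceeds its threshold."""
    return [
        name
        for name, limit in THRESHOLDS.items()
        if name in measurements and measurements[name] > limit
    ]


# Invented measurements for a sample page.
page_metrics = {"lcp_seconds": 3.1, "fid_milliseconds": 80, "cls_score": 0.05}
flagged = needs_improvement(page_metrics)
print(flagged)
```

Metrics missing from the measurements are skipped rather than flagged, so a partial report will not produce false alarms.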

15. Keeping Up with Algorithm Changes

Search algorithms have evolved from simple keyword matching to natural language processing to understand search intents.

The 90s saw the rise of search engines like AltaVista and Lycos that required users to input specific terms to find relevant results. But when Google launched in 1998, it brought a new approach to responding to search queries.

Google introduced PageRank, which ranked pages by the number and quality of links pointing to them and so surfaced the most authoritative websites. Over time, it also incorporated natural language processing to understand users’ search intent from their queries.

Today, Google uses machine learning to rank results more accurately and personalize the search experience for each user. To help websites attract higher ranking and visibility, Google releases algorithm updates featuring changes to how it ranks content.

Using this information, you can adjust your SEO strategy accordingly. Keep tabs on the Google Search Central Blog and communities like WebmasterWorld and Reddit’s r/SEO to stay updated with the latest insights on algorithm changes.

Integrating Technical SEO with Other Forms of SEO

Technical SEO will help improve your website’s architecture and user experience, and it’ll make crawling and indexing your pages easier and more efficient. Combined with strategic on-page SEO, it ensures that your content is valuable for readers, meets search intent, and is rank-worthy.

Off-page SEO, at the other end of the spectrum, enhances your website authority through external efforts. This is where you build your authority with the recognition of industry websites, social media marketing, and other brand mentions. When this holistic SEO strategy comes together, you can improve your domain authority, rank higher on SERPs, and get tangible results from all your SEO efforts. Technical SEO is a niche field, but you can take the trial and error out of your “holistic SEO strategy” by hiring capable hands for your technical SEO needs.