SEO Spiders: What are Search Engine Crawl Spiders & How Do They Work?

Editor’s Note: This post was originally published in February of 2020 and has been updated in March 2025 for accuracy and comprehensiveness.

There are spiders on your website.

Don’t freak out! I’m not talking about real eight-legged spiders. I’m referring to search engine optimization (SEO) spiders. They’re the bots that make SEO happen. Every major search engine uses spiders to catalog the perceivable internet. You can see your site the way these bots do with an SEO spider tool, which can crawl both small and large websites, analyze results in real time, and support SEO audits such as localization checks and broken link detection.

It is through the work of these spiders, sometimes referred to as crawl spiders or crawlers, that your website is ranked on popular search engines like Google, Bing, Yahoo, and others.

Of course, Google is the big dog of the search engine world, so when optimizing a website, it’s best to keep Google’s spiders in mind most of all.

But what are search engine crawl spiders?

The crux of it is simple: In order to rank highly on search engine results pages, you have to write, design, and code your website to appeal to them.

That means you have to know what they are, what they’re looking for, and how they work.

Armed with that information, you’ll be able to optimize your site better, knowing what the most significant search engines in the world are seeking.

Let’s get into it.

What Are Search Engine Spiders?

Before you can understand how a web crawler works and how you can appeal to it, you first have to know what it is.

Search engine spiders are the foot soldiers of the search engine world. A search engine like Google has certain things that it wants to see from a highly ranked site. The crawler moves across the web and carries out the will of the search engine.

A crawler is simply a piece of software guided by a particular purpose. For spiders, that purpose is the cataloging of website information.

Google’s spiders crawl across websites, gathering and storing data. They have to determine not only what the page is but the quality of its content and the subject matter contained within.

They do this for every site on the web. To put that in perspective, there were an estimated 1.94 billion websites as of 2019, and that number rises every day. Every new site that pops up has to be crawled, analyzed, and cataloged by spider bots.

Meta robots directives play a crucial role in guiding these search engine spiders. They provide instructions on how to interact with different pages, ensuring that essential URLs are not blocked from indexing.
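To make the idea of meta robots directives concrete, here is a minimal Python sketch of how a crawler-like script might read them from a page. It only uses the standard library, and the sample page and the `robots_directives` helper are made up for illustration. Real search engine spiders are far more sophisticated than this.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            # The content attribute is a comma-separated list of directives.
            for part in attr_map.get("content", "").split(","):
                directive = part.strip().lower()
                if directive:
                    self.directives.add(directive)


def robots_directives(html):
    """Return the set of meta robots directives found in an HTML string."""
    parser = MetaRobotsParser()
    parser.feed(html)
    return parser.directives


# Hypothetical page: a thank-you page the owner does not want indexed.
sample_page = """
<html><head>
  <title>Thank you</title>
  <meta name="robots" content="noindex, follow">
</head><body>Thanks for your order.</body></html>
"""

directives = robots_directives(sample_page)
print("Blocked from indexing:", "noindex" in directives)
```

Running a check like this across your important URLs is one way to catch a stray `noindex` before it keeps a page out of the index.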

The search engine crawlers then deliver gathered data to the search engine for indexing. That information is stored until it is needed. When a Google search query is initiated, the results and rankings are generated from that index.

Since 2009, we have helped thousands of businesses grow their online leads and sales strategically. Let us do it for you!

Understanding Search Engine Bots

Search engine bots, also known as spiders or crawlers, are the unsung heroes of the internet. These software programs tirelessly scan and index the web to ensure that when you search for something, you get the most relevant results. Think of them as digital librarians, constantly updating the catalog to keep everything in order.

These bots are designed to mimic human behavior, navigating through websites just like you would. They gather data to improve search engine results pages (SERPs), ensuring that the most relevant and high-quality content rises to the top. But how do they decide what’s relevant? That’s where algorithms come into play. These complex formulas take into account factors like content quality, link equity, and user experience to determine a website’s relevance and authority.

Understanding how search engine bots work is crucial for anyone looking to optimize their website. By knowing what these bots are looking for, you can tailor your content to meet their criteria, improving your chances of ranking higher in search results. There are two main types of search engine bots: general-purpose bots, which scan the entire web, and specialized bots, which focus on specific tasks like indexing images or videos. Each type plays a vital role in the search engine ecosystem.

How Does a Crawler Work?

A crawler is a complicated piece of software. It has to be if it’s going to catalog the entire web. But how does this bot work?

First, the crawler visits a web page looking for new data to include in the search engine index. That is its ultimate goal and the reason for its existence. But a lot of work goes into this search engine bot’s task. One of the significant challenges is to effectively crawl JavaScript websites, as they often contain dynamically generated content that requires specific configurations to render and crawl properly.

Step 1: Spiders Check Out Your Robots.txt File

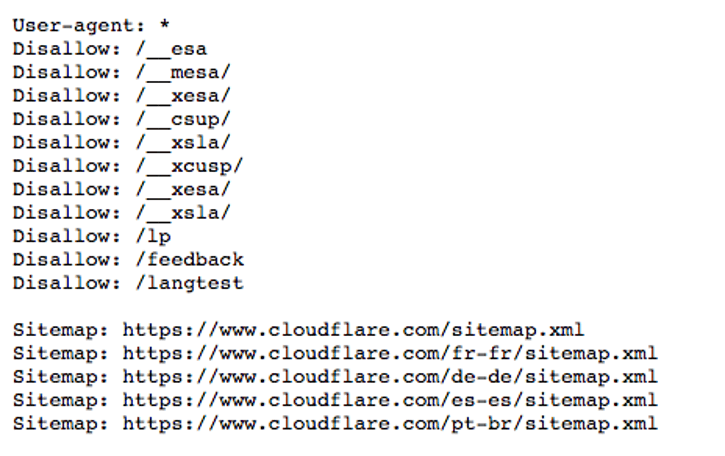

When Google’s spiders arrive at a new website, they immediately download the site’s robots.txt file. The robots.txt file gives the spiders rules about what pages can and should be crawled on the site. It also lets them look through sitemaps to determine the overall layout of the pages and how they should be cataloged.

Robots.txt is a valuable piece of the SEO puzzle, yet it’s something that a lot of website builders don’t give you direct control over. There are individual pages on your site that you might want to keep from Google’s spiders.

Can you block your website from getting crawled?

You absolutely can, using robots.txt.

But why would you want to do this?

Let’s say you have two very similar pages with a lot of duplicate content. Google hates duplicate content, and it’s something that can negatively impact your ranking. That’s why it’s good to be able to edit your robots.txt file to blind Google to specific pages that might have an unfortunate effect on your SEO score.
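If you want to check what a robots.txt file actually allows, Python’s standard library ships a parser for it. The sketch below is only an illustration: the rules, the paths, and the `is_crawlable` helper are assumptions invented for this example, and Python’s matching may differ in small ways from Google’s own parser. The `site_maps()` call requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for www.example.com. The blocked paths stand in
# for near-duplicate pages you might not want crawled.
robots_txt = """\
User-agent: *
Disallow: /print-versions/
Disallow: /internal-search/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())


def is_crawlable(url, user_agent="Googlebot"):
    """Return True if the parsed rules allow user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)


for url in (
    "https://www.example.com/services/",
    "https://www.example.com/print-versions/services/",
):
    print(url, "->", "allowed" if is_crawlable(url) else "blocked")

# Sitemap URLs declared in the file.
print(parser.site_maps())
```

Keep in mind that robots.txt controls crawling. A blocked page can still show up in results if other sites link to it, so test your rules before relying on them.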

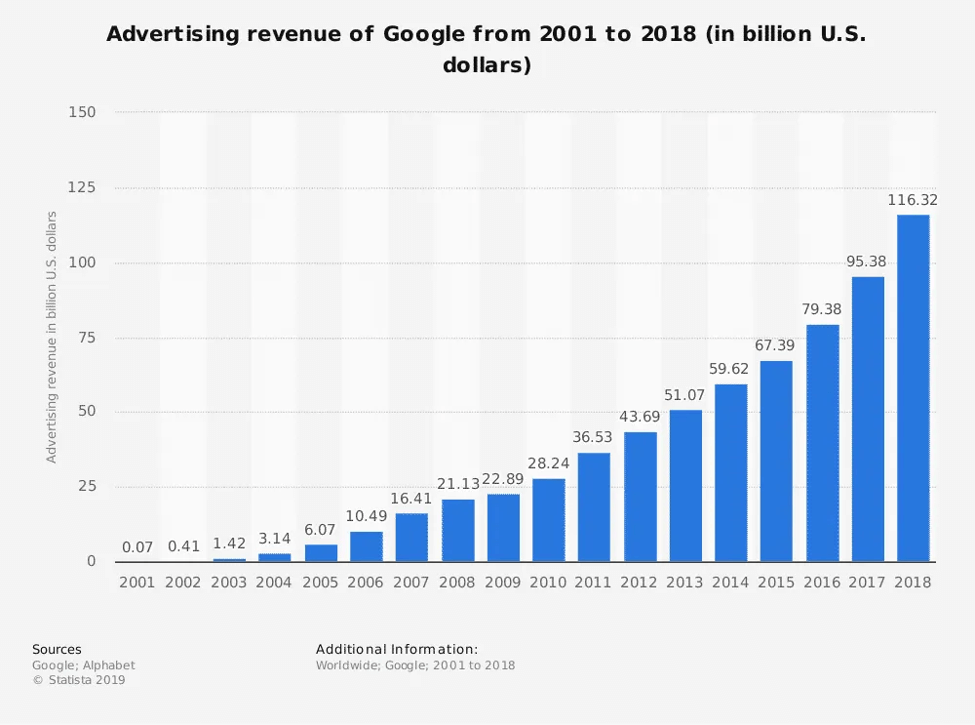

Google is super particular about things like duplicate content because its business model is dedicated to providing accurate and quality search results. That’s why their search algorithm is so advanced. If they’re providing the best information possible, customers will continue to flock to their platform to find what they’re looking for.

By delivering quality search results, Google attracts consumers to their platform, where they can show them ads (which are responsible for 70.9% of Google’s revenue).

So, if you think that the spiders are too critical of things like duplicate content, remember that quality is the chief concern for Google:

- Quality suggestions lead to more users.

- More users lead to increased ad sales.

- Increased ad sales lead to profitability.

Step 2: Spiders Check Your Links

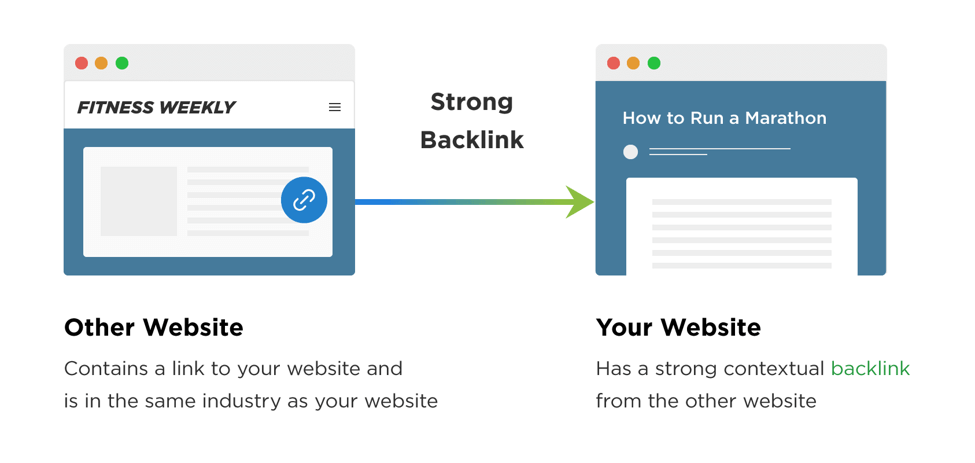

One major factor that spiders home in on is linking. Spiders can not only recognize hyperlinks, but they can follow them as well. They use your site’s internal links to move around and continue cataloging. Internal links are essential for a lot of reasons, and one of them is that they create an easy path for search bots to follow.

Spiders will also take careful note of your outbound links, along with which third-party sites are linking to yours. When we say that link building is one of the most critical elements of an SEO plan, we’re telling the truth. You have to create an internal web of links between your pages and blog posts. You also have to make sure you’re linking to outside sources.
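Here is a rough Python sketch of how a script could sort a page’s links into internal and outbound ones, which is a simplified version of what a spider does when it follows links. The page URL, the sample HTML, and the `classify_links` helper are all hypothetical, and the sketch treats any link on a different host as outbound.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def classify_links(html, page_url):
    """Split a page's links into (internal, outbound) absolute URLs."""
    collector = LinkCollector()
    collector.feed(html)
    site_host = urlparse(page_url).netloc.lower()
    internal, outbound = [], []
    for href in collector.hrefs:
        absolute = urljoin(page_url, href)  # resolve relative links
        parsed = urlparse(absolute)
        if parsed.scheme not in ("http", "https"):
            continue  # skip mailto:, tel: and similar
        if parsed.netloc.lower() == site_host:
            internal.append(absolute)
        else:
            outbound.append(absolute)
    return internal, outbound


sample_html = """
<a href="/services/">Services</a>
<a href="../contact">Contact</a>
<a href="https://partner.example.org/guide">Industry guide</a>
<a href="mailto:hello@example.com">Email us</a>
"""

internal, outbound = classify_links(sample_html, "https://www.example.com/blog/post")
print("Internal:", internal)
print("Outbound:", outbound)
```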

But beyond all of that, you have to make sure that external sites that are in high favor with Google and relevant to your site are linking to you.

As we mentioned in the last section, Google needs to know that it is giving high-quality and legitimate suggestions to searchers in order to maintain its dominance and, by extension, profitability.

When a site links to you, think of it as a letter of recommendation. If you’re applying for a job as a nurse, you will come prepared with letters of recommendation from previous hospital administrators and medical professionals with whom you’ve worked.

If you show up with a short letter from your mailman and your dog groomer, they may have beautiful things to say about you, but their word is not going to carry a lot of weight in the medical field.

SEO is a job interview with Google.

You’re interviewing for the top spots in your industry every second that you’re online. Google’s spiders are the HR representatives conducting the interview and checking your sources before reporting back to their higher-ups and deciding your eligibility.

Step 3: Spiders Check Your Copy

A common misconception about search engine spiders is that they just come onto the page and count all of your keywords.

While keywords play a part in your rank, spiders do a lot more than that.

Crawling JavaScript-rich websites presents specific challenges for search engine spiders, because they have to render dynamic content before they can crawl it. This can affect crawling speed and intensity, especially on larger websites.

SEO is all about tweaks to your copy. Those tweaks are made in an attempt to impress Google’s spiders and give them what they’re looking for.

But what are search engine spiders looking for when they review your website copy?

They’re trying to determine three key factors.

- The relevance of your content. If you’re a dental website, are you focusing on dental information? Are you getting off-topic on random tangents or dedicating areas of your site to other unrelated themes? If so, Google’s bots will become confused as to how they should rank you.

- The overall quality of your content. Google spiders are sticklers for quality writing. They want to make sure that your text is in keeping with Google’s high standards. Remember, Google’s recommendation carries weight, so it’s not just about how many keywords you can stuff into a paragraph. The spiders want to see quality over quantity.

- The authority of your content. If you’re a dental website, Google needs to make sure that you’re an authority in your industry. If you want to be the number one result for a specific keyword or phrase, then you have to prove to Google’s spiders that you are the authority on that particular topic.

If you include structured data, also known as schema markup, in the code of your site, you’ll earn extra points with Google’s spiders. This markup gives the spiders more information about your website and helps them list you more accurately.
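Structured data is often added as a JSON-LD script tag using the schema.org vocabulary. The Python sketch below builds one for a local business. The business details are made up, the `local_business_jsonld` helper is hypothetical, and the fields shown are only a small subset of what schema.org defines, so treat it as a starting point and not a complete markup.

```python
import json


def local_business_jsonld(name, street, city, region, postal_code, phone, url):
    """Build a JSON-LD <script> tag describing a local business."""
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "url": url,
        "telephone": phone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
        },
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Hypothetical business used only for illustration.
snippet = local_business_jsonld(
    name="Example Dental Care",
    street="123 Main St.",
    city="Springfield",
    region="IL",
    postal_code="62701",
    phone="(217) 555-0142",
    url="https://www.example.com/",
)
print(snippet)
```

You would paste the resulting tag into the page’s HTML and then validate it with a structured data testing tool before publishing.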

It’s also never a good idea to try and trick Google’s spiders. They’re not as dumb as a lot of SEO marketers seem to think. Spiders can quickly identify black-hat SEO tactics.

Black-hat SEO encompasses immoral tactics used to try and trick Google into giving a site a higher ranking without creating quality content and links.

An example of a black-hat SEO tactic would be keyword stuffing, where you’re piling keywords nonsensically into a page. Another tactic that black-hat SEO firms use is creating backlinks through dummy pages that contain a link back to your site.

And a decade ago, these tactics worked. But since then Google has gone through many updates, and its spider bots are now capable of identifying black hat tactics and punishing the perpetrators.

Spiders index black-hat SEO information, and penalties can be issued if your content is proven to be problematic.

These penalties can be something small yet effective, like downranking the site, or something as severe as a total delisting, in which your site vanishes from Google altogether.

Step 4: Spiders Look At Your Images

Spiders will take an accounting of your site’s images as they crawl the web. However, this is an area where Google’s bots need some extra help. A spider can’t just look at a picture and determine what it is. It understands that there’s an image there, but it is not advanced enough to get the actual context.

That’s why it’s so important to have alt tags and titles associated with every image. If you’re a cleaning company, you likely have pictures showing off the results of your various office cleaning techniques. Unless you specify that the image is of an office cleaning technique in the alt tag or title, the spiders aren’t going to know.
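A quick way to find images that give spiders nothing to work with is to scan your HTML for `img` tags with no alt text. This is a minimal Python sketch using only the standard library. The sample markup and the `images_missing_alt` helper are invented for the example.

```python
from html.parser import HTMLParser


class AltAudit(HTMLParser):
    """Records the src of every <img> that has no usable alt text."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        alt = (attr_map.get("alt") or "").strip()
        if not alt:
            # Note: an empty alt is valid for purely decorative images,
            # so review the flagged files before changing them.
            self.missing_alt.append(attr_map.get("src") or "(no src)")


def images_missing_alt(html):
    """Return the src of each image without alt text, in page order."""
    audit = AltAudit()
    audit.feed(html)
    return audit.missing_alt


sample_html = """
<img src="/img/before.jpg" alt="Office lobby before deep cleaning">
<img src="/img/after.jpg">
"""

print(images_missing_alt(sample_html))
```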

Step 5: Spiders Do It All Again

A Google spider’s job is never done. Once it is finished cataloging a site, it moves on and will eventually recrawl your site to update Google on your content and optimization efforts.

These bots are continually crawling to find new pages and new content. You can indirectly influence the frequency with which your pages are recrawled. If you’re regularly updating your site, you’re giving Google a reason to catalog you again. For JavaScript-rich websites, frequent recrawling is essential because dynamic content changes often. That’s why consistent updates (and blog posts) should be a part of every SEO plan.

SEO Spider Tools and Software

If search engine bots are the librarians of the internet, then SEO spider tools are the magnifying glasses that help you see what they see. These tools are designed to mimic the behavior of search engine bots, crawling through your website to identify areas for improvement. They provide invaluable insights into your site structure, content, and technical SEO issues, helping you fine-tune your online presence.

Popular SEO spider tools like Screaming Frog SEO Spider, Netpeak Spider, and Ahrefs offer a range of features to help you optimize your site. For instance, Screaming Frog SEO Spider can crawl your website to find broken links, optimize meta descriptions, and analyze your site structure. These tools are like having a mini search engine bot at your disposal, giving you the information you need to make your site more search engine-friendly.

By leveraging SEO spider tools, you can gain a competitive edge in search engine rankings. These tools help you identify and fix issues that could be holding your site back, ensuring that you stay ahead of the competition. Whether you’re looking to optimize your meta descriptions, find broken links, or improve your site structure, SEO spider tools are an essential part of your SEO toolkit.

How Do You Optimize Your Site for SEO Spiders?

To review, there are several steps that you can take to make sure that your site is ready for Google’s spiders to crawl.

Step 1: Have a clear site hierarchy

Site structure is crucial to ranking well in the search engines. Making sure pages are easily accessible within a few clicks allows crawlers to access the information they need as quickly as possible.
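One way to measure “a few clicks” is to compute each page’s click depth from the home page with a breadth-first search over your internal links. The Python sketch below does that on a small, hypothetical link map. The three-click threshold is an assumption chosen for the example, not an official limit.

```python
from collections import deque


def click_depths(link_graph, home="/"):
    """Return {page: minimum number of clicks from home} via breadth-first search."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: each page maps to the internal pages it links to.
site = {
    "/": ["/services/", "/blog/"],
    "/services/": ["/services/office-cleaning/"],
    "/blog/": ["/blog/post-1/"],
    "/blog/post-1/": ["/services/office-cleaning/", "/blog/archive/2019/"],
    "/blog/archive/2019/": ["/blog/old-post/"],
    "/orphan/": [],
}

depths = click_depths(site)
too_deep = sorted(page for page, depth in depths.items() if depth > 3)
orphans = sorted(set(site) - set(depths))  # pages no internal link reaches

print("More than 3 clicks deep:", too_deep)
print("Unreachable from home:", orphans)
```

Pages that show up in either list are good candidates for a new internal link from a higher-level page.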

Step 2: Do Keyword Research

Understand what kind of search terms your audience is using and find ways to work them into your content.

Step 3: Create Quality Content

Write clear content that demonstrates your authority on a subject. Remember not to keyword stuff your text. Stay on topic and prove both your relevance and expertise.

Step 4: Build Links and Find Broken Links

Create a series of internal links for Google’s bots to use when making their way through your site. Build backlinks from outside sources that are relevant to your industry to improve your authority.

Step 5: Optimize Meta Descriptions and Title Tags

Before a web crawler makes its way onto your page’s content, it will first read through your page title and metadata. Make sure that these are optimized with keywords. The need for quality content extends to here as well.
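You can spot-check titles and meta descriptions with a short script. The Python sketch below pulls both from a page and flags ones that are missing or long. The 60 and 160 character limits are common rules of thumb used here as assumptions, not published Google limits, and the `audit_head` helper and sample page are hypothetical.

```python
from html.parser import HTMLParser


class HeadAudit(HTMLParser):
    """Captures the <title> text and the meta description content."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = ""
        self.description = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            attr_map = dict(attrs)
            if (attr_map.get("name") or "").lower() == "description":
                self.description = (attr_map.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_head(html, max_title=60, max_description=160):
    """Return (title, description, issues) for an HTML string."""
    parser = HeadAudit()
    parser.feed(html)
    title = parser.title.strip()
    issues = []
    if not title:
        issues.append("missing title")
    elif len(title) > max_title:
        issues.append("title may be truncated")
    if not parser.description:
        issues.append("missing meta description")
    elif len(parser.description) > max_description:
        issues.append("meta description may be truncated")
    return title, parser.description, issues


sample_page = """
<html><head>
  <title>Office Cleaning in Springfield | Example Cleaning Co</title>
</head><body></body></html>
"""

title, description, issues = audit_head(sample_page)
print(title)
print(issues)
```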

Step 6: Add Alt Tags For All Images

Remember, the spiders can’t see your images. You have to describe them to Google through optimized copy. Use up the allowed characters and paint a clear picture of what your pictures are showcasing.

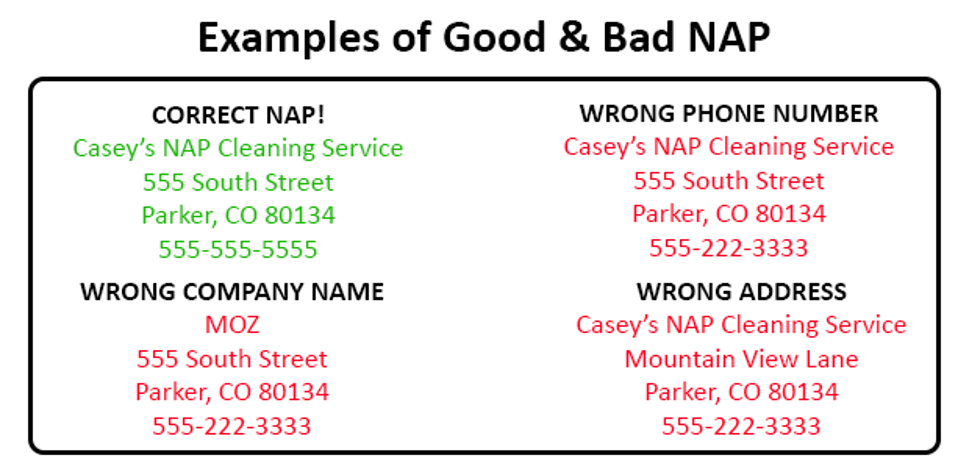

Step 7: Ensure NAP Consistency

If you’re a local business, you have to make sure that your Name, Address, and Phone Number not only appear on your site and throughout various third-party platforms, but that they are consistent everywhere. That means that no matter where you’re listing a NAP citation, the information should be identical.

That also applies to spelling and abbreviations. If you’re on Main Street, but you want to abbreviate to Main St., make sure you’re doing that everywhere. A crawler will notice inconsistencies, and it will hurt your brand legitimacy and SEO score.
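If you keep a list of your citations, a small script can flag the ones that don’t match your website. The Python sketch below compares each listing to a reference entry and separates formatting differences (like “Street” versus “St.”) from listings where the details themselves differ. The listings, the abbreviation table, and the helper names are all made up for illustration.

```python
import re

# Assumed abbreviation table; extend it for your own addresses.
ABBREVIATIONS = {"street": "st", "avenue": "ave", "road": "rd", "suite": "ste"}


def normalize_address(address):
    """Lowercase, drop punctuation, and abbreviate common street words."""
    words = re.sub(r"[^\w\s]", "", address.lower()).split()
    return " ".join(ABBREVIATIONS.get(word, word) for word in words)


def nap_key(listing):
    """Reduce a listing to a comparable (name, address, phone) tuple."""
    return (
        listing["name"].strip().lower(),
        normalize_address(listing["address"]),
        re.sub(r"\D", "", listing["phone"]),  # digits only
    )


def compare_listings(reference, others):
    """Return {source: problem} for listings that are not identical to reference."""
    report = {}
    for listing in others:
        if all(listing[f] == reference[f] for f in ("name", "address", "phone")):
            continue  # exact match, nothing to fix
        if nap_key(listing) == nap_key(reference):
            report[listing["source"]] = "formatting differs"
        else:
            report[listing["source"]] = "details differ"
    return report


website = {"source": "website", "name": "Example Cleaning Co",
           "address": "123 Main Street, Springfield", "phone": "(217) 555-0142"}
citations = [
    {"source": "directory_a", "name": "Example Cleaning Co",
     "address": "123 Main St., Springfield", "phone": "217-555-0142"},
    {"source": "directory_b", "name": "Example Cleaning Co",
     "address": "125 Main Street, Springfield", "phone": "(217) 555-0142"},
    {"source": "directory_c", "name": "Example Cleaning Co",
     "address": "123 Main Street, Springfield", "phone": "(217) 555-0142"},
]

report = compare_listings(website, citations)
print(report)
```

Both kinds of mismatch are worth fixing, but the “details differ” entries are the urgent ones because they point customers and crawlers to the wrong place.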

Step 8: Update Your Site Regularly

A constant stream of new content will ensure that Google always has a reason to crawl your site again and update your score. Blog posts are a perfect way to keep a steady stream of fresh content on your website for search engine bots to crawl over.
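An XML sitemap with last-modified dates is one common way to tell crawlers which pages have changed. The Python sketch below builds a minimal one following the sitemaps.org format. The URLs and dates are hypothetical, the `build_sitemap` helper is invented for this example, and search engines decide for themselves how much weight to give the dates.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, last_modified in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = last_modified.isoformat()
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages and the dates they were last updated.
pages = [
    ("https://www.example.com/", date(2025, 3, 1)),
    ("https://www.example.com/blog/spring-cleaning-tips/", date(2025, 3, 14)),
]

sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

If your platform already generates a sitemap, you don’t need a script like this. The point is simply that each new or updated page should show up there with a current date.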

Using SEO Tools for Better Optimization

SEO tools are the Swiss Army knives of the digital marketing world. They are essential for optimizing your website content and improving your search engine rankings. By using these tools, webmasters and SEO professionals can identify technical SEO issues, optimize meta descriptions, and analyze site structure, all of which are crucial for a successful SEO strategy.

Popular SEO tools like Google Search Console, Ahrefs, and SEMrush offer a wide range of features to help you improve your online presence. Google Search Console, for example, provides insights into how search engine bots interact with your site, helping you identify and fix crawl errors. Ahrefs and SEMrush offer comprehensive tools for keyword research, backlink analysis, and competitive analysis, giving you a well-rounded view of your SEO performance.

By leveraging these SEO tools, you can stay ahead of the competition and improve your search engine rankings. These tools provide valuable insights into search engine bot behavior and website crawlability, helping you make informed decisions about your SEO strategy. Whether you’re looking to optimize your meta descriptions, improve your site structure, or gain a better understanding of your SEO performance, these tools are indispensable for any website owner looking to drive more traffic and revenue to their site.

In Conclusion

A strong understanding of SEO spiders and search engine crawling can have a positive impact on your SEO efforts. You need to know what they are, how they work, and how you can optimize your site to fit what they’re looking for.

Ignoring SEO spider crawlers can be the fastest way to ensure that your site wallows in obscurity. Every query is an opportunity. Appeal to the crawlers, and you’ll be able to use your digital marketing plan to rise up the search engine ranks, achieving the top spot in your industry and staying there for years to come.
